A really cool aspect of my major is that we get to build Lego robots in a third-year core lab course. So, essentially, our project is to code and actually have fun doing it, and while (ugh) I understand other normal courses are slightly more “practical” in the sense that, for example, we’re expected to implement a boring word-sorting algorithm for a programming project, this course wins hard in comparison. Word-sorting algorithms: I love you, but you aren’t made of colourful blocks that bring me back to my 6-year-old joys and wonderments. Okay – that was a blatant lie: the same friend who bought me my first serious bottle of wine also bought me my first Lego set for my 18th birthday. My mum’s cleanliness was drilled into my brain when I was a young kid, and I had refused to play with Lego since the 1st grade because all the other dirty kids played with it.

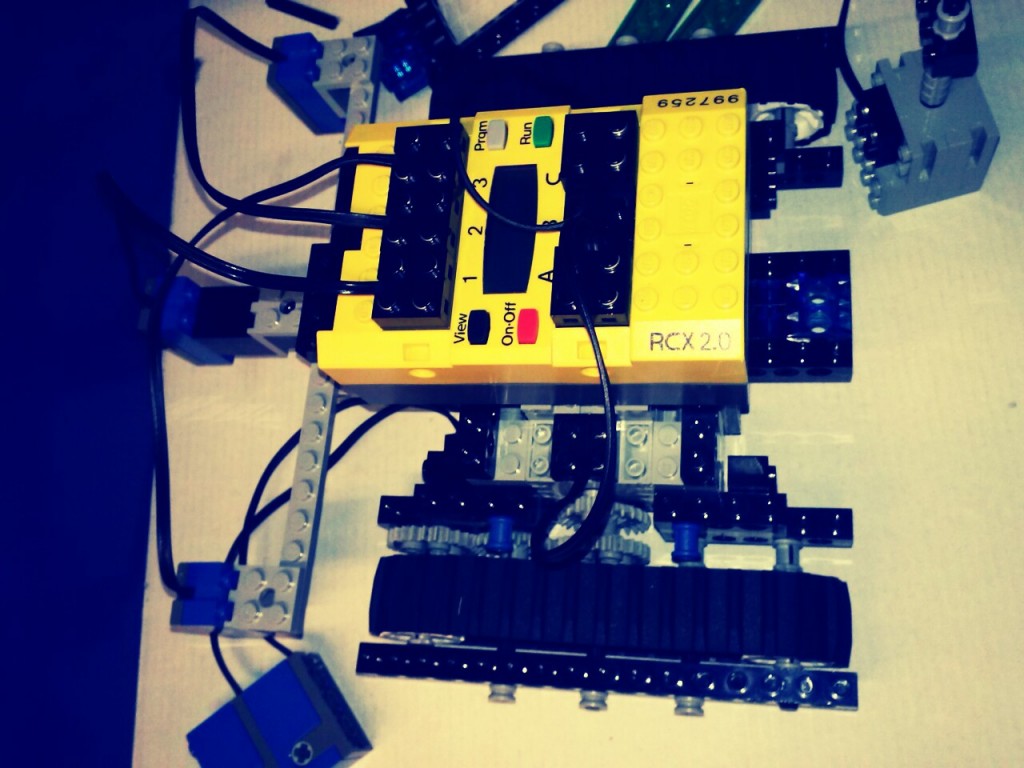

The first big project of this course had four groups each get their Lego robot vehicle across a four-way intersection without any collisions. That went well. My group was great. We did have a sort of pre-project on the first day, where we coded a light-seeking robot (in a simple Braitenberg sense: it heads towards light and then stops once it has receded into darkness).
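A light-seeker in the simple Braitenberg sense can be sketched in a few lines. This is only an illustrative Python sketch, not the code from the course: the function name `motor_speeds`, the `gain` and `dark_threshold` parameters, and the assumption that both light sensors report values between 0 and 1 are all made up for the example.

```python
def motor_speeds(left_light, right_light, gain=1.0, dark_threshold=0.05):
    """Return (left_motor, right_motor) speeds for a two-sensor light-seeker.

    Assumes hypothetical sensor readings normalised to the range 0..1.
    """
    # In darkness (both readings below the threshold) the robot stops.
    if max(left_light, right_light) < dark_threshold:
        return 0.0, 0.0
    # Crossed wiring: each sensor drives the motor on the opposite side,
    # so the wheel farther from the light spins faster and the robot
    # turns towards the brighter side.
    left_motor = gain * right_light
    right_motor = gain * left_light
    return left_motor, right_motor


if __name__ == "__main__":
    # Light is brighter on the left, so the right motor runs faster
    # and the robot steers left.
    print(motor_speeds(0.8, 0.2))
    # Both sensors read (almost) nothing, so the robot stops.
    print(motor_speeds(0.0, 0.01))
```

The crossed wiring is the whole trick: there is no planning or map, just two sensor values feeding two motors, and the light-seeking behaviour falls out of that.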

For the second part of the project, four groups had to create their own robot that could traverse a maze, pass three checkpoints in order, and make it to the finish line in 10 minutes. The presentation was only 20 percent of the project grade (the rest for the subsequent report), with an increasing mark depending on how far you got in the maze. The walls were made out of masking tape, because the sensors used to detect the environment were light-sensitive, and through this and other stipulations (e.g. no hard-coding), we were expected to build a suitable robot and write code for it. Seriously? I can’t believe this is a class. It’s like an engineering robotics lab course without all the math.

While fun, there are obviously some (hidden) learning objectives, like exploring the idea of creating or coding an autonomous agent and seeing how it interacts with its environment, and what factors matter. That is: is it a deterministic environment? Is it a complex environment? Will our agent have to “learn” information? How should we physically implement our robot? Why is there a tampon under one of the lab tables that everyone pretends to never have seen because no one wants to throw it away?

This course – and my major, for that matter – differs from computer science courses that teach you the details of artificial intelligence and human-computer interaction. This course attempts to bridge disciplines (computer science, psychology, linguistics, philosophy) at a comparatively higher level. For example, we will have experimental lecture topics, such as: linguistic or conversational game theory, bounded rationality, Bayesian networks, the evolution of ethics, or killer robots (of course, the one lecture I had to miss for other stupid things). On the other hand, and if I understand correctly, I would assume that a computer science-centric artificial intelligence class would be based on implementing such a system through abstract data structures – like efficiently prioritizing learned data through heaps or some detailed bullshit I can’t pretend to be able to describe right now. Although I’m defs looking forward to taking that course.

I very much like my major. Is it the most practical? Maybe not, but it’s essentially the Malcolm Gladwell of UBC majors: novel, integrated, and experimental enough that it’s fun; but scientific and relevant enough that it’s applicable and important, with a strong flair of cutting-edge subjects. There are, of course, lows – and I’ve had my share of drifting off in class (who hasn’t?), not to mention the fact that most assigned readings are dry (yet admittedly, awesomely (over)informative) research articles – but I still love the experimental nature of it all. I’m also in the co-op program, which means that I get to go for work experience for a couple of terms in between studying for my degree. So that’s cool too.

It’s weird how a lot of people ask how my major and my interest in alcohol come together, and I can’t say I haven’t thought of it myself. I’ve attempted to code a chatterbot that suggests wines to the user based on linguistically natural input. It’s still a work in progress, and a project such as this can arguably always be improved in a comparatively obvious sense, so it’s always fun trying to find ways to improve it code-wise, while also being able to bring some wine knowledge to the program’s database. A lot of the subject matter we learn in Cognitive Systems also has to do with perception and the way we or other agents interpret information from the environment. Of course, we view this idea at a high level – we may look at how we intuitively process information or make decisions based on predicted utility. But I’d personally like to look at perception (and perhaps decision-making) at a deeper level, and perhaps explore the idea of (artificial) sensation as it pertains to Cognitive Systems; that is, it would be cool to study and explore the senses of smell, sight, taste, etc. not only from a biopsychological perspective, but from a Cognitive Systems one as well. This is obviously a stretch, but what isn’t, in this course, you know?

Pair killer robots with Sancerre, Menetou-Salon, or some Touriga Nacional-based Portuguese blend. Oh yes. I went there.